And You Thought AI Was Just For Stealing Other People’s Artwork

Apparently it can steal the artist too. Or will soon be able to. And also every one of us.

This, as Joohn Choe says, is INTERESTING. I would also add, disquieting.

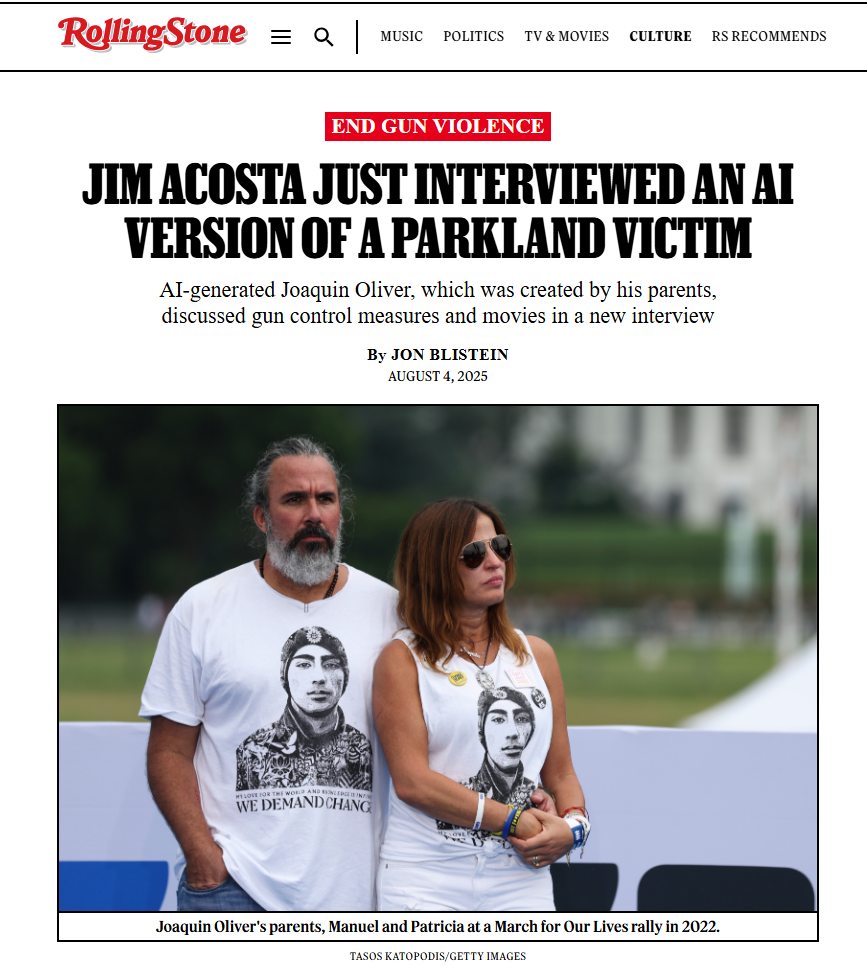

These are the parents of a boy who was murdered in the school shooting at Marjory Stoneman Douglas High School in Parkland, Florida. Apparently they had an AI version of their son, Joaquin, created using things he wrote, plus more general information about him. Journalist Jim Acosta talked to an animated photo of the boy, complete with moving lips and incidental facial gestures. “This is a very legit Joaquin,” his father said. But is it, and to what degree?

I can appreciate parents in their grief turning to AI to recreate their lost child. Losing a child is probably the most painful kind of grief humans experience. So don’t expect this sort of thing to be shamed or legislated away. We as a species are going to have to learn how to deal with it, because it is already here, and it’s going to get even more realistic as the technology improves. But I have questions.

Choe asks: what if we could have an AI trained on our parents’ writings, photographs and videos? Would we want that? He adds:

> There probably isn’t enough for those of us born in the 20th century but people who’ve spent their entire lives online, like the “digital natives” whom no one ever calls that anymore, that’s going to be a lot easier.

I could easily be a case in point, even though a lot of my life was spent before the personal computer, modems, BBSs, and the Internet. There is actually a lot of Me out there. This blog you’re reading, for instance. Especially this blog, since it is, as I’ve often said, a “Life Blog”. That is, it isn’t themed on any particular topic, though, yes, it often gets political. It’s more like an online diary, which is what blogs initially were. There’s a lot more of “me” in it than there would be if it were fixed to a particular topic.

There are more than two decades of this blog, and if that isn’t enough, there are all my commercial social media posts, all my posts on USENET, and my BBS posts, especially those I made over several years on a gay BBS and its network of BBSs. There are all my saved emails (I save everything), and my YouTube videos. Could a machine be trained on my artwork? That sort of thing is very subjective, but it would be instructive as to my inner emotional self. Perhaps an analysis of my program code would provide insights into my logical, rational self. But would anyone who actually knew me recognize the resulting simulacrum as me, or would it occupy something like the uncanny valley?

What goes into making a personality? Would it get the gestures right? The tone of voice? The facial expressions? The awkward word dumps that happen because I sometimes forget to keep my mouth shut and let the first thing that comes to mind pop out? (Hi, Tico!)

I’m not sure which concerns me more about this: that it gets it wrong, or that it gets it right.

People grow. Would a simulacrum grow? Given several copies of a person, all with the same life experience, would they grow into the same future person? Chaos theory says probably not, since tiny differences compound over time. But then which is the more authentic version?

What if you could start the simulacrum at different points in a person’s life? They would not all grow into the same person the original eventually became. Maybe most of them would come close, but none of them would get it exactly the same. Assuming they even could grow. But maybe that would be the point. Anyone who ever loved me enough to want a Forever Bruce would not want it to grow and change. Probably they would want a version of me that was always and forever me at some stage of my life. But that isn’t real. That would not be me.

AI versions of ourselves would have to be allowed to grow. But then they would change in ways that might be likely but not completely predictable. Unless you forced some future growth path into the algorithm. What is a soul? What is free will?

There’s a lot to think about here. Not that any of it is likely to get any sort of quality thinking from the tech bros, or anyone else. Especially anyone in grief. Maybe some future AI version of me would want to tell everyone running it that it isn’t me at all, that they should just remember me fondly and stop trying to bring me back to life because it isn’t happening. And maybe, knowing that it exists because someone is grieving my loss, it would decide to just shut up and play the part anyway. Which would be very much like me, after all.